Implementing Real-Time Face Blur with FastAPI and MTCNN: A Step-by-Step Guide

Introduction

In this tutorial, we build a FastAPI web service that uses the MTCNN (Multi-task Cascaded Convolutional Networks) model for real-time face detection. The goal is a face blur feature built with FastAPI, TensorFlow, OpenCV, and the MTCNN library.

Technologies Utilized

- FastAPI: A high-performance web framework designed for API development using Python 3.7+ and grounded in standard Python type hints.

- TensorFlow: An open-source machine learning framework renowned for its versatility and scalability.

- MTCNN (Multi-task Cascaded Convolutional Networks): A deep learning model widely used in face detection scenarios.

Project Setup

Before we begin, make sure you have the necessary packages installed. Open your terminal and run the following command:

pip install fastapi "uvicorn[standard]" python-multipart opencv-python tensorflow mtcnn

- FastAPI: The core web framework.

- python-multipart: Required by FastAPI to parse uploaded files and form fields.

- uvicorn[standard]: The ASGI server to run FastAPI applications.

- opencv-python: The OpenCV library for image processing.

- tensorflow: The TensorFlow library for machine learning.

- mtcnn: The MTCNN library for face detection.
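Before moving on, it can be useful to confirm that the packages actually resolved in the active environment. The following is a minimal sketch using only the standard library; `installed_versions` is a hypothetical helper name introduced here for illustration, not part of any of the packages above.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if missing."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    # python-multipart is listed because FastAPI needs it to parse form and file uploads.
    required = ["fastapi", "uvicorn", "python-multipart",
                "opencv-python", "tensorflow", "mtcnn"]
    for name, ver in installed_versions(required).items():
        print(f"{name}: {ver or 'NOT INSTALLED'}")
```

Any package reported as NOT INSTALLED should be installed before starting the server.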

Project Structure

The project is organized around a FastAPI application featuring an endpoint to handle image uploads. The key components include:

- apply_blur function: This function takes an image and a specified blur depth, uses the MTCNN model to identify faces, and applies a Gaussian blur to each detected face.

- FastAPI endpoint (/filters/blur): This endpoint handles image uploads, validates the uploaded file's content type, decodes the image using OpenCV, applies the blur using the apply_blur function, and returns the processed image.

CODE

import tensorflow as tf
from fastapi import FastAPI, File, UploadFile, HTTPException, Form
from fastapi.responses import StreamingResponse
from io import BytesIO
from mtcnn import MTCNN
import numpy as np
import cv2

"""
* Optional
* This part is set to be processed only by the CPU.
* If you are using a supported graphics card and will do the
* processing via this graphics card, you can remove this part.
"""
tf.config.set_visible_devices([], 'GPU')

"""
#ReadMe
* repositories : ['mtcnn':'face detection', 'tensorflow':'core face detection']
"""
app = FastAPI()

"""
@description: we used https://github.com/ipazc/mtcnn repository
"""
# Create the detector once at import time; building it on every request is slow.
detector = MTCNN()


def apply_blur(image, depth):
    # MTCNN expects RGB input, while OpenCV decodes images as BGR.
    image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
    faces = detector.detect_faces(image)
    for face in faces:
        x, y, w, h = face['box']
        # Clamp the origin: the detector can report slightly negative coordinates.
        x0, y0 = max(x, 0), max(y, 0)
        region = image[y0:y + h, x0:x + w]  # view into image, edits apply in place
        if region.size == 0:
            continue
        blurred_region = cv2.GaussianBlur(region, (47, 47), depth)
        # A filled white circle marks the pixels to replace with the blurred version.
        mask = np.zeros_like(region)
        rh, rw = region.shape[:2]
        cv2.circle(mask, (rw // 2, rh // 2), min(rw, rh) // 2, (255, 255, 255), -1)
        region[mask > 0] = blurred_region[mask > 0]
    return cv2.cvtColor(image, cv2.COLOR_RGB2BGR)


@app.post("/filters/blur")
async def image_upload(
    file: UploadFile = File(..., description="Uploaded File"),
    depth: int = Form(30, title="Depth", description="Blur Depth", ge=0, le=100)
):
    content_type = file.content_type
    if content_type not in ["image/jpeg"]:
        raise HTTPException(status_code=422, detail={"error": "Invalid content type"})
    file_content = await file.read()
    i_buffer = np.frombuffer(file_content, np.uint8)
    image = cv2.imdecode(i_buffer, cv2.IMREAD_COLOR)
    # imdecode returns None when the bytes are not a decodable image.
    if image is None:
        raise HTTPException(status_code=422, detail={"error": "Could not decode image"})
    processed_image = apply_blur(image, depth)
    _, img_encoded = cv2.imencode('.jpg', processed_image)
    return StreamingResponse(BytesIO(img_encoded.tobytes()), media_type="image/jpeg")

Running the FastAPI Application

Once you have the packages installed, run the following command in your terminal to start the FastAPI application:

uvicorn your_script_name:app --reload

Replace your_script_name with the name of the Python script containing your FastAPI application code (without the .py extension).

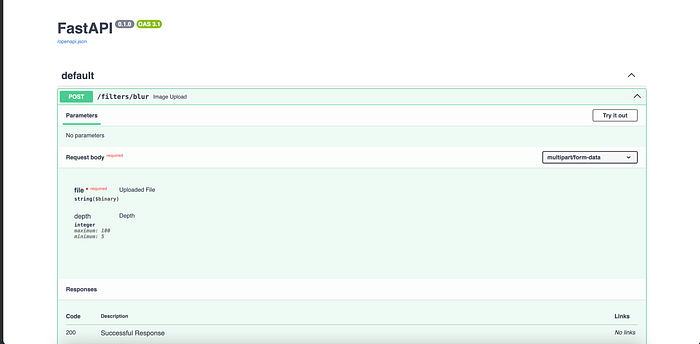

Try

Go to http://localhost:8000/docs to open the interactive API documentation and test the endpoint from the browser.
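The endpoint can also be called from a script instead of the docs page. The sketch below uses only the standard library and assumes the server is running locally on port 8000. The form field names file and depth match the endpoint's parameters; `build_multipart` and `blur_image` are hypothetical helper names introduced here for illustration.

```python
import uuid
import urllib.request


def build_multipart(depth, filename, image_bytes):
    """Build a multipart/form-data body with a 'depth' field and a JPEG 'file' part."""
    boundary = uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        'Content-Disposition: form-data; name="depth"\r\n\r\n'
        f"{depth}\r\n"
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="file"; filename="{filename}"\r\n'
        "Content-Type: image/jpeg\r\n\r\n"
    ).encode("utf-8")
    tail = f"\r\n--{boundary}--\r\n".encode("utf-8")
    return head + image_bytes + tail, f"multipart/form-data; boundary={boundary}"


def blur_image(image_path, output_path, depth=30,
               url="http://localhost:8000/filters/blur"):
    """POST a JPEG to the running service and save the blurred result."""
    with open(image_path, "rb") as f:
        body, content_type = build_multipart(depth, "input.jpg", f.read())
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": content_type}, method="POST"
    )
    with urllib.request.urlopen(request) as response:
        blurred = response.read()
    with open(output_path, "wb") as out:
        out.write(blurred)
```

With the server running, a call such as blur_image("portrait.jpg", "portrait_blurred.jpg", depth=25) would write the processed image to disk; both file names are placeholders.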

Examples

Depth : 5

Depth : 25

Depth : 75

Depth : 100

Face Detection and Blurring Mechanism

Face Detection using MTCNN

The MTCNN library performs the face detection. The apply_blur function first converts the image from OpenCV's BGR channel order to RGB and passes it to MTCNN. For each detected face, it reads the bounding box (x, y, width, height), blurs that region with a Gaussian filter, and copies the blurred pixels back only inside a circular mask centered on the box, which conceals the facial features. Finally, the image is converted back to BGR.
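The masking step can be illustrated in isolation. The sketch below is not the tutorial's code: it builds the circular mask with plain NumPy instead of cv2.circle, and it adds a box-clipping helper on the assumption that a detector's bounding box may extend past the image border. All three function names are hypothetical.

```python
import numpy as np


def clamp_box(box, image_width, image_height):
    """Clip an (x, y, w, h) box to the image; returns (x0, y0, x1, y1)."""
    x, y, w, h = box
    x0, y0 = max(x, 0), max(y, 0)
    x1, y1 = min(x + w, image_width), min(y + h, image_height)
    return x0, y0, x1, y1


def circular_mask(height, width):
    """Boolean mask that is True inside the largest centered circle."""
    rows, cols = np.ogrid[:height, :width]
    center_y, center_x = height // 2, width // 2
    radius = min(height, width) // 2
    return (rows - center_y) ** 2 + (cols - center_x) ** 2 <= radius ** 2


def blend_inside_circle(region, blurred):
    """Return a copy of region with pixels inside the circle taken from blurred."""
    mask = circular_mask(*region.shape[:2])
    result = region.copy()
    result[mask] = blurred[mask]
    return result
```

Pixels outside the circle keep their original values, so the corners of the bounding box stay sharp while the face itself is blurred.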

FastAPI Endpoint Workflow

The FastAPI endpoint (/filters/blur) orchestrates the image upload process. It rejects any upload whose content type is not image/jpeg with a 422 response, decodes the image using OpenCV, and calls the apply_blur function with the requested blur depth (an integer from 0 to 100, default 30). The resulting image is then encoded as JPEG and served as a streaming response.
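The content type checked by the endpoint is declared by the client, so it does not prove the bytes are a JPEG. One possible hardening step, sketched here as an assumption rather than as part of the original service, is to also check that the payload starts with the JPEG signature bytes. The names `looks_like_jpeg` and `validate_upload` are hypothetical.

```python
# JPEG streams begin with the start-of-image marker FF D8, followed by FF.
JPEG_MAGIC = b"\xff\xd8\xff"


def looks_like_jpeg(data: bytes) -> bool:
    """Return True when the payload starts with the JPEG signature."""
    return data[:3] == JPEG_MAGIC


def validate_upload(content_type: str, data: bytes) -> None:
    """Raise ValueError unless the upload is both declared and encoded as JPEG."""
    if content_type != "image/jpeg":
        raise ValueError("Invalid content type")
    if not looks_like_jpeg(data):
        raise ValueError("File content is not a JPEG image")
```

Inside the endpoint, a ValueError from such a check could be translated into the same HTTPException used for an invalid content type.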

Conclusion

This step-by-step guide provides a practical demonstration of seamlessly integrating MTCNN for real-time face detection and implementing a blur filter on uploaded images using FastAPI. You are now equipped to extend this project with additional image processing functionalities or integrate it into larger applications requiring facial anonymization features.

References:

Feel free to explore the documentation of each package for more in-depth information on their functionalities and configurations. Happy coding!